I have recently joked that a lot of the AI stuff I’ve seen and read on LinkedIn has been slop, but I think there’s a real value in telling these people off. Especially the people who think it’s going to completely replace competent people.

The above tweet is an example of that. Someone wrote “these people are the enemy,” and if we’re being honest I think that kind of framing, while inflammatory, isn’t wholly off the mark.

There is no moral virtue in being lazy or not. But that’s what this is. It’s laziness. Intellectual sloth and incuriosity on a systemic level. In the example above, it’s someone who wants a specific answer but is unwilling to encounter anything adjacent to it that might encourage accidental learning.

People are touting AI agents as this thing that’s gonna revolutionize how we absorb information, shop, make reservations, etc. Basically how we live.

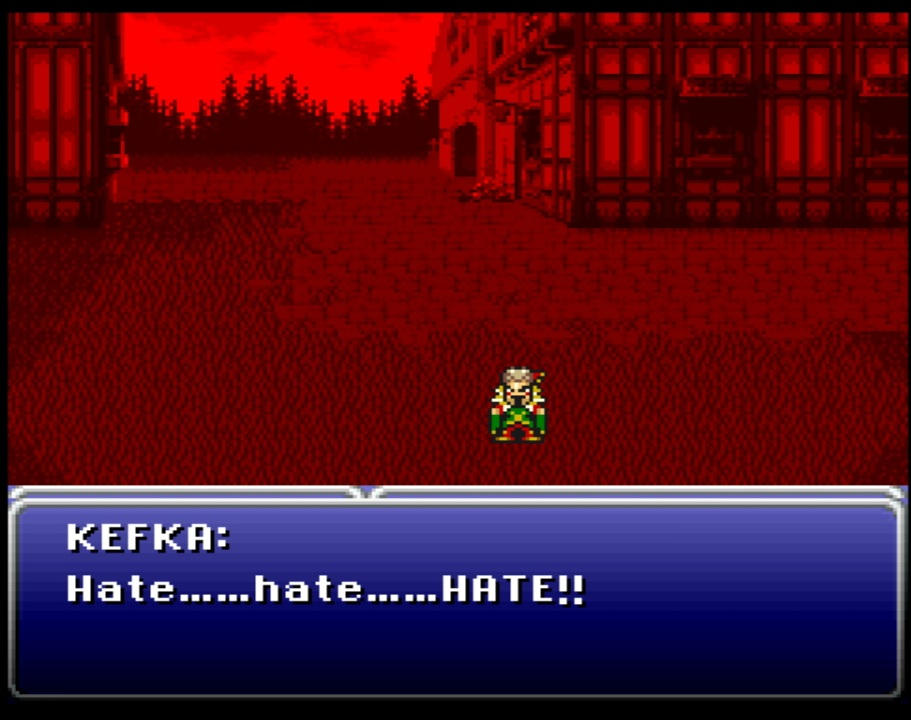

I hate it. I hate it all. I want to smash my computer with a hammer when I learn about it. I understand, with an intimate familiarity that borders on the parasocial, the joke from Parks and Recreation where Ron Swanson carries his computer to the dumpster.

Because when it comes to reading or writing or making dinner reservations or ordering flowers for our spouses or planning birthday parties for our kids… What’s the point? What’s the point of any of this if we outsource our experiences to a computer? If I don’t internalize my wife’s favorite flowers or my kid’s current obsession, am I truly invested and present in their lives?

Probably not.

Having a computer do that work, or the work of research at all (and then lie to you about the things that it’s read or made up out of whole cloth), robs you of your very literacy. Reading a text and wrestling with its ideas, and learning how to summarize it yourself, is fundamental to the act of storytelling, both creation and consumption.

And speaking of creation, why bother learning why putting words, one in front of the other, is such a rewarding experience? What good is poetry if no one stops and thoughtfully considers how the syllables fall into place, juxtaposed against one another and bouncing off the tongue as you read it aloud?

Why bother learning about the importance of framing a photo or shot or composition if you can just say, “Make a picture of my dog that looks like he’s in a Wes Anderson movie,” without understanding why Anderson makes the aesthetic choices he does?

And for the more mundane things that AI is supposed to fix, maybe there’s an argument that it shouldn’t? Waiting on hold is an exercise in emotional regulation and patience. At its best, going to a store and buying things from a sales associate teaches empathy and helps you think about things you never considered. I don’t want to read AI summaries of reviews. I want to read the actual reviews from the people who bought the thing and loved or hated it.

We are in such a race to the bottom of efficiency with this stuff—stuff that has a wildly destructive environmental impact. What is the point of making the tasks we dread faster if it ruins the planet and robs us of the ability to do those tasks anyway? It’s like that joke about millennials and the phone—I HATE the shit out of making phone calls to make appointments and would rather do it online, but at least I know HOW to make a phone call and perform the basics of telephone etiquette.

I won’t deny that sometimes it would be nice to have more time, but as we’ve seen, the “efficiencies” so many of our companies are chasing with heavy-handed AI usage are never passed down to workers. We’re not saving time so that we can have six-hour workdays or four-day workweeks, the stuff that we’re ostensibly supposed to be making that time for.

No. Instead, we’re saving time so we can do MORE work for MORE clients to make MORE money. Not for us, no, but for our C-suite leaders.

And none of this even begins to approach the other problems with LLMs, like the propensity of people to fall into psychosis through continuous use.

I wanted so badly for LLMs to make my life as a content marketer easier, and in many respects I guess they still have that potential. I could use them to write outlines, or even first drafts, but I can tell a huge difference when I sit down to write something after having heavily leaned on them for work tasks. Writing introductions is harder. Synthesizing my ideas, too. Finding the right word isn’t as simple as it used to be.

And for someone who prides themselves on authenticity in writing, whether that’s a client blog post or some shit I came up with and typed in the Notes app of my phone after reading the tweet at the beginning of this post, finding the right words is where the work starts.

Not with a prompt. Not with a robot doing the heavy lifting.

I’ll be honest: When I sat down to write this (on my couch with a cup of coffee), I didn’t really have an end in mind. That’s why it’s so abrupt. It’s a post. But maybe there’s something in there worth thinking about.